At Dover, one metric we closely track for every customer is how many potential passive candidates are interested in talking to a company (# of interested responses / # of total responses to outbound emails). A few months ago, we did a big push company-wide to see if we could increase interest rates across our customer base.

We had a lot of theories as to what caused low interest rate: Was it the Great Resignation/Reshuffle, unclear job descriptions, or a weak employer brand? Was it bad timing? Was it a bad email? The reality was that it was probably a combination of all these things, and with inbound applications decreasing across the board, we needed to focus on engaging passive talent to provide the most value to our customers.

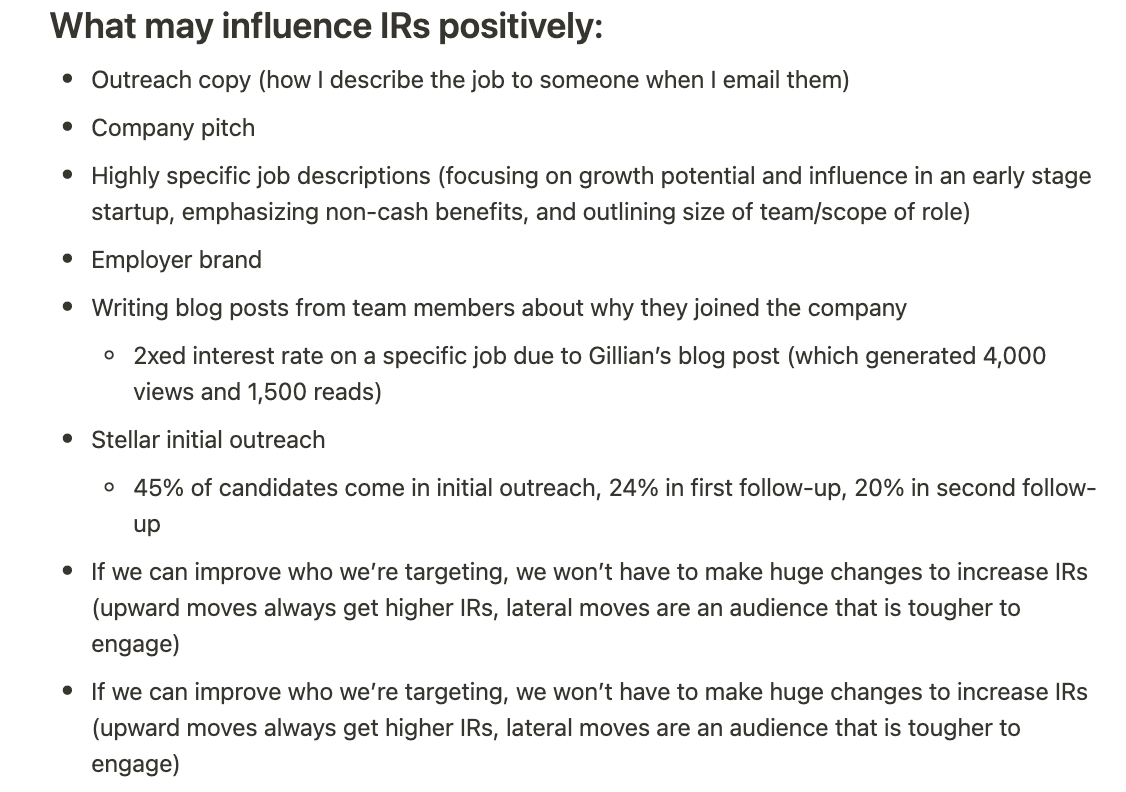

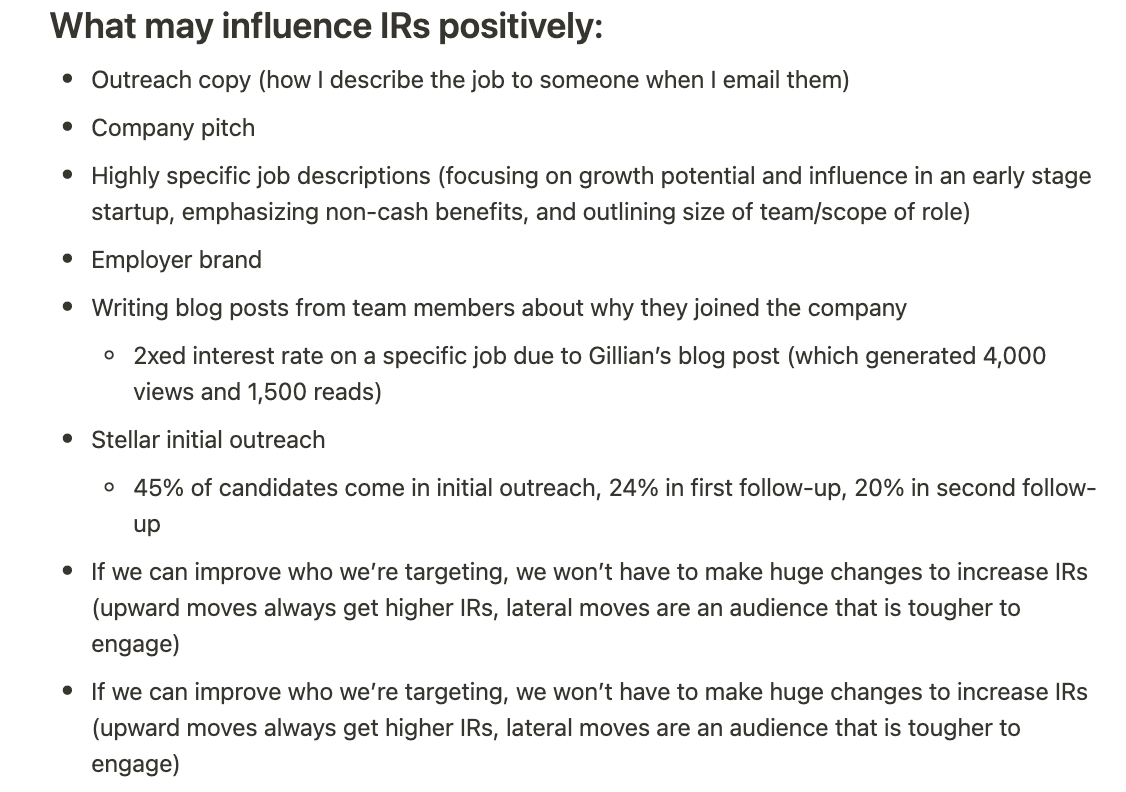

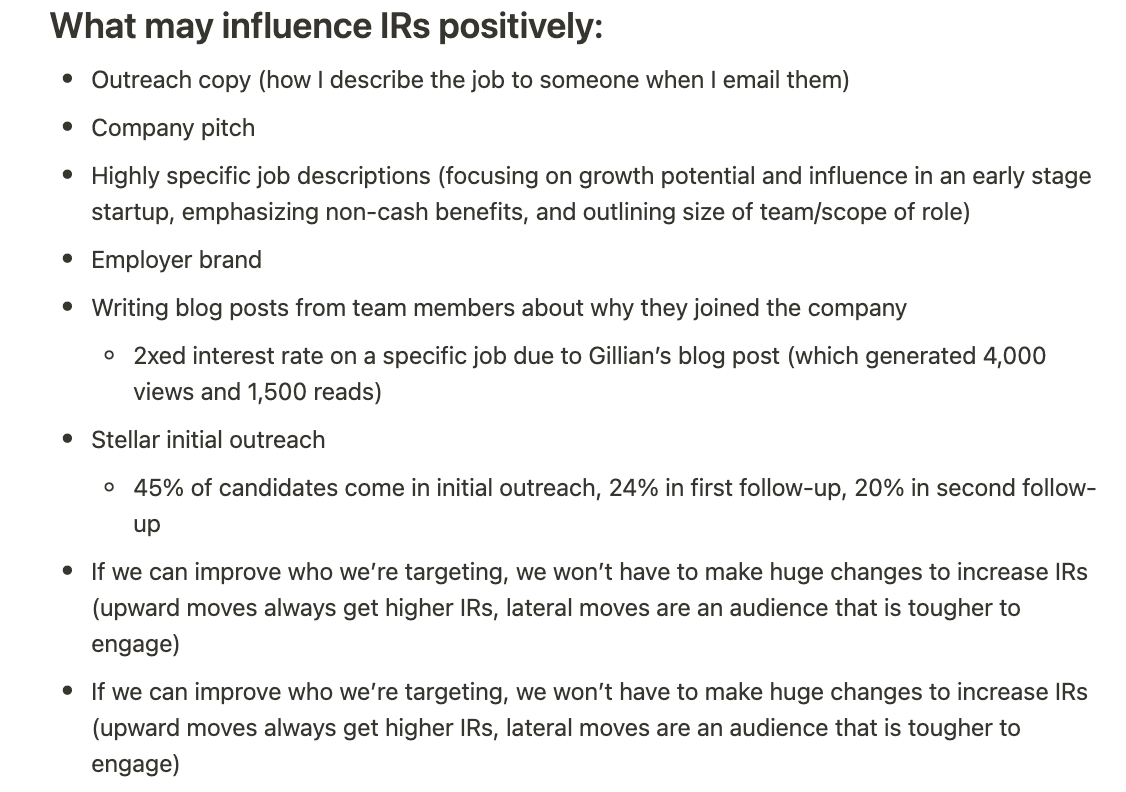

I came up with two lists — what may influence interest rates positively and what may influence them negatively. Here are the actual screenshots:

Each department pulled the levers that they could. Our marketing team was tasked with employer branding and initial cold recruiting emails. As our content lead, I was tasked with figuring out if we could improve our autogenerated recruiting emails to get better interest and response rates.

Ultimately, I decided to go all in on outreach. Even though we attempted to help customers with their employer branding through a few experiments, we realized that outreach was the only lever we could scientifically measure, control, and ensure quality for.

The early days of Dover outreach

Compared to human outreach, Dover's automated outreach is highly effective. It pulls information from job descriptions and company pitches for personalized outbound emails in a way that no other tool on the market does so far. Why these four? They were things we'd anecdotally seen raise interest rate across different roles.

At Dover, studies are nothing unusual - we rely heavily on data to ensure we're providing the most value in our software. And we never incorporate research into our products unless we completely validate it.

The results were too interesting not to share, and the findings have completely reinvented the way we allow customers to test and iterate on outreach in the app.

The hypothesis

My ultimate goal was to understand whether changing the email body itself really produced any tangible effect on interest rates in the recruitment process or if it was beholden to market conditions. My hypothesis was simple: outreach must have some impact since it is the first touchpoint candidates have with an organization.

The limitations

Since what appeals to one candidate might not appeal to all, I wanted to use data to explain behavior by looking at patterns and measuring for statistical significance.

Dover has tons of data from more than 500 companies across thousands of roles. That being said, if candidates never apply, I can’t ask them anything, especially (and crucially) why. That left me with three main limitations that were unique to each individual who opens a cold email and doesn’t respond:

- Outreach content has some mix of job-specific and company-specific information, so I don’t know if candidates have problems with the role or the company.

- It’s hard to delineate between the impact of company-specific content versus the impact of the company’s overall reputation/employer brand (after all, candidates will Google you before hopping on a call).

- There is no way to know how many recruitment emails a candidate received that day from other companies, how many companies they're already talking to, etc.

Knowing this, I decided to try to control for external variables as best I could (I segmented experiment subjects into groups by role type, company size, and existing interest rate) and iterated on each experiment at least three times to validate my results.
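For readers who want to run a similar check, here is a minimal sketch of one common way to test whether a difference in interest rate between two email variants is statistically significant: a two-proportion z-test. The post does not say which test was actually used, so treat the method, the function names, and the numbers below as assumptions for illustration only.

```python
import math


def interest_rate(interested: int, total_responses: int) -> float:
    """Interested responses divided by total responses to outbound emails."""
    if total_responses == 0:
        return 0.0
    return interested / total_responses


def two_proportion_z_test(interested_a, total_a, interested_b, total_b):
    """Return (z, two-sided p-value) for variant B's rate minus variant A's rate."""
    if total_a == 0 or total_b == 0:
        raise ValueError("both variants need at least one response")
    rate_a = interest_rate(interested_a, total_a)
    rate_b = interest_rate(interested_b, total_b)
    # Pooled rate under the null hypothesis that both variants perform the same.
    pooled = (interested_a + interested_b) / (total_a + total_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if se == 0:
        return 0.0, 1.0
    z = (rate_b - rate_a) / se
    # Two-sided tail probability of the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts, not Dover data: control email vs. a new variant.
z, p = two_proportion_z_test(40, 1000, 80, 1000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value (commonly below 0.05) suggests the gap is unlikely to be noise. With low response counts per role, the test has little power, which is one reason to repeat an experiment several times before trusting it.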

So, what did I find?

For the most part, my instincts were wrong. Which is exactly why we do research!

TL;DR: I found that concise language and vague subject lines (more on that later) had a more significant impact on interest rate than I imagined (about 2x more), while the two other categories, providing links and listing non-cash benefits, had negligible (and, in some cases, negative) impact.

We’ll go over the 4 major experiment categories we tested, plus a 5th that ended up being our magic bullet. The variables included subject lines, follow-up email timing, email structure (using incomplete sentences or bullet points), linking to LinkedIn profiles and job descriptions, and mentioning non-cash benefits (like perks, stipends, and PTO).

The experiments

Study mechanics

Who: 200 roles with low interest rates (3% or lower than benchmark).

What: In each study, I performed an A/B test that ran our autogenerated outreach against a version with the new variable I was testing.

When: The tests ran for two weeks each.

How: For each experiment, I did three to five iterations. In many cases, I took outliers, or roles that underperformed compared to the rest of the test group, and put them in different experiments or iterations to see if I could yield different results.

Controls: I tried to maintain similar role types in the same experiment group so that I could control as much as I could for the limitations talked about above (i.e. I assumed that broadly, design folks would probably assess companies similarly, as would engineers, AEs, etc.).
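As a rough illustration of the control idea above, the sketch below splits roles into a control arm and a variant arm within each role type, so that both arms contain a similar mix of roles. The post does not say whether the split was made per role or per candidate, so the role-level split, the field names (`id`, `role_type`), and the sample data are all assumptions.

```python
import random
from collections import defaultdict


def assign_variants(roles, seed=0):
    """Randomly split roles into 'control' and 'variant' within each role type."""
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    by_type = defaultdict(list)
    for role in roles:
        by_type[role["role_type"]].append(role)

    assignment = {}
    for group in by_type.values():
        shuffled = group[:]
        rng.shuffle(shuffled)
        half = len(shuffled) // 2
        # First half gets the existing email, the rest gets the new variant.
        for index, role in enumerate(shuffled):
            assignment[role["id"]] = "control" if index < half else "variant"
    return assignment


# Hypothetical roles: four engineering roles and four design roles.
sample_roles = [
    {"id": i, "role_type": "engineering" if i < 4 else "design"} for i in range(8)
]
print(assign_variants(sample_roles))
```

Splitting inside each role type keeps one arm from ending up mostly engineers and the other mostly designers, which would blur the effect of the email change itself.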

The winners

Experiment #1: Subject lines

Test A: Adding reading time to a subject line.

Test B: Adding series funding to a subject line.

Test C: Using a vague subject line — specifically “[First Name], looking for something new?”

Results: Test C produced a 25% increase in interest rate across 80% of test subjects. Both Test A and Test B produced negligible results.

The takeaway

Surprisingly, vague subject lines appealed more to candidates than hyper-specific, hyper-personalized ones. It makes sense — other recruiting tools and automation platforms were beginning to mimic each other’s style, and anyone’s eyes would scroll past a line they’ve heard time and time again.

✨ Experiment #2: Bullet point structure - the standout

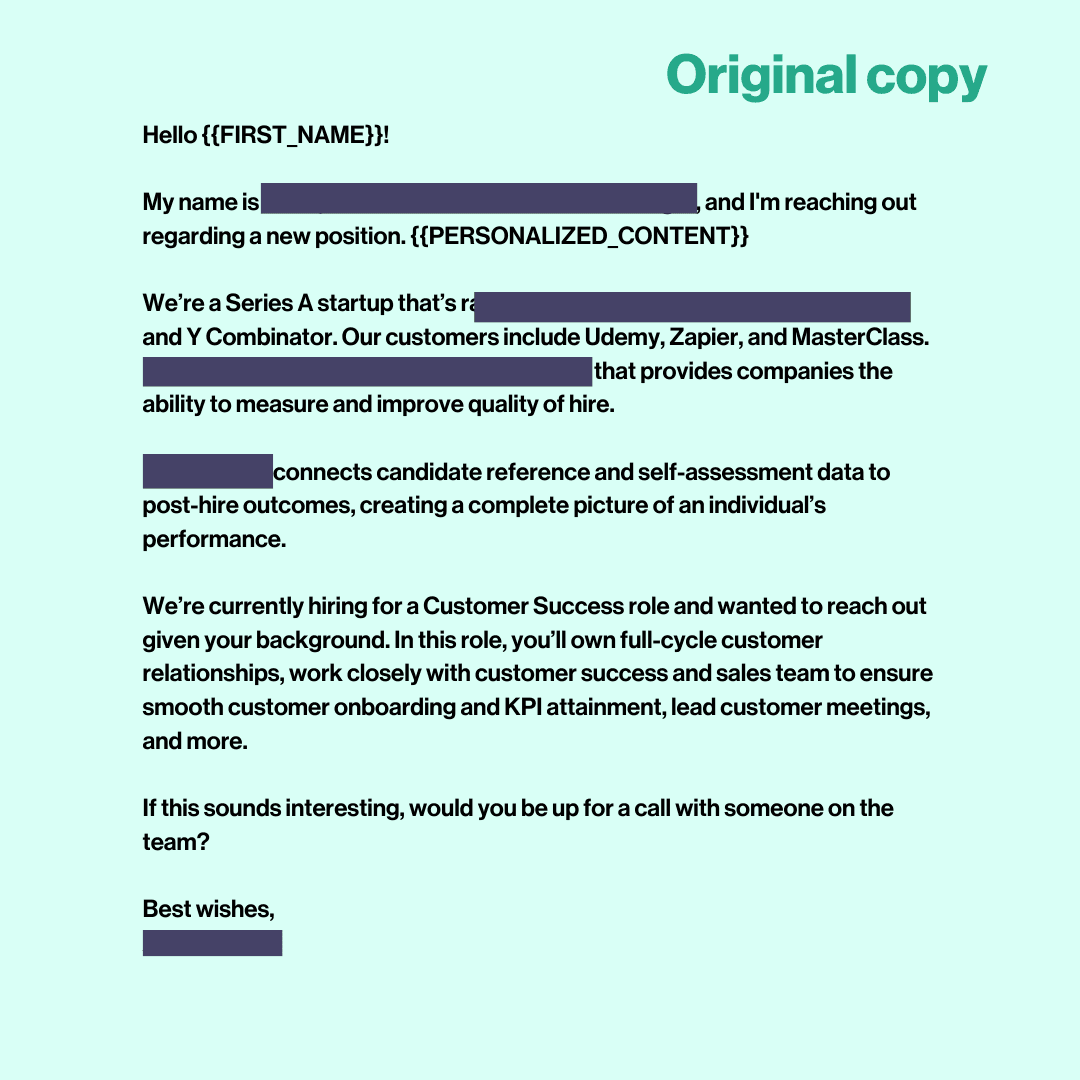

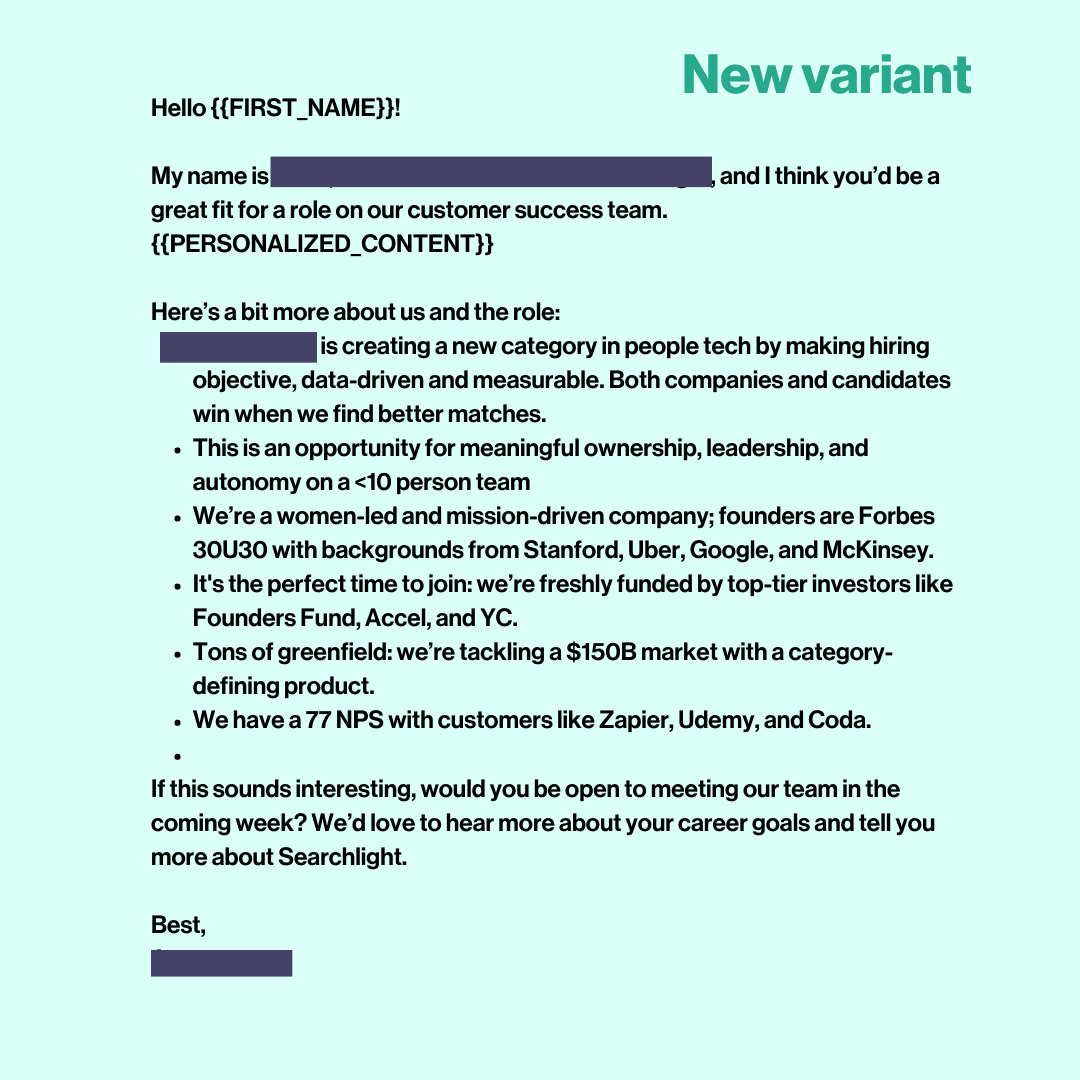

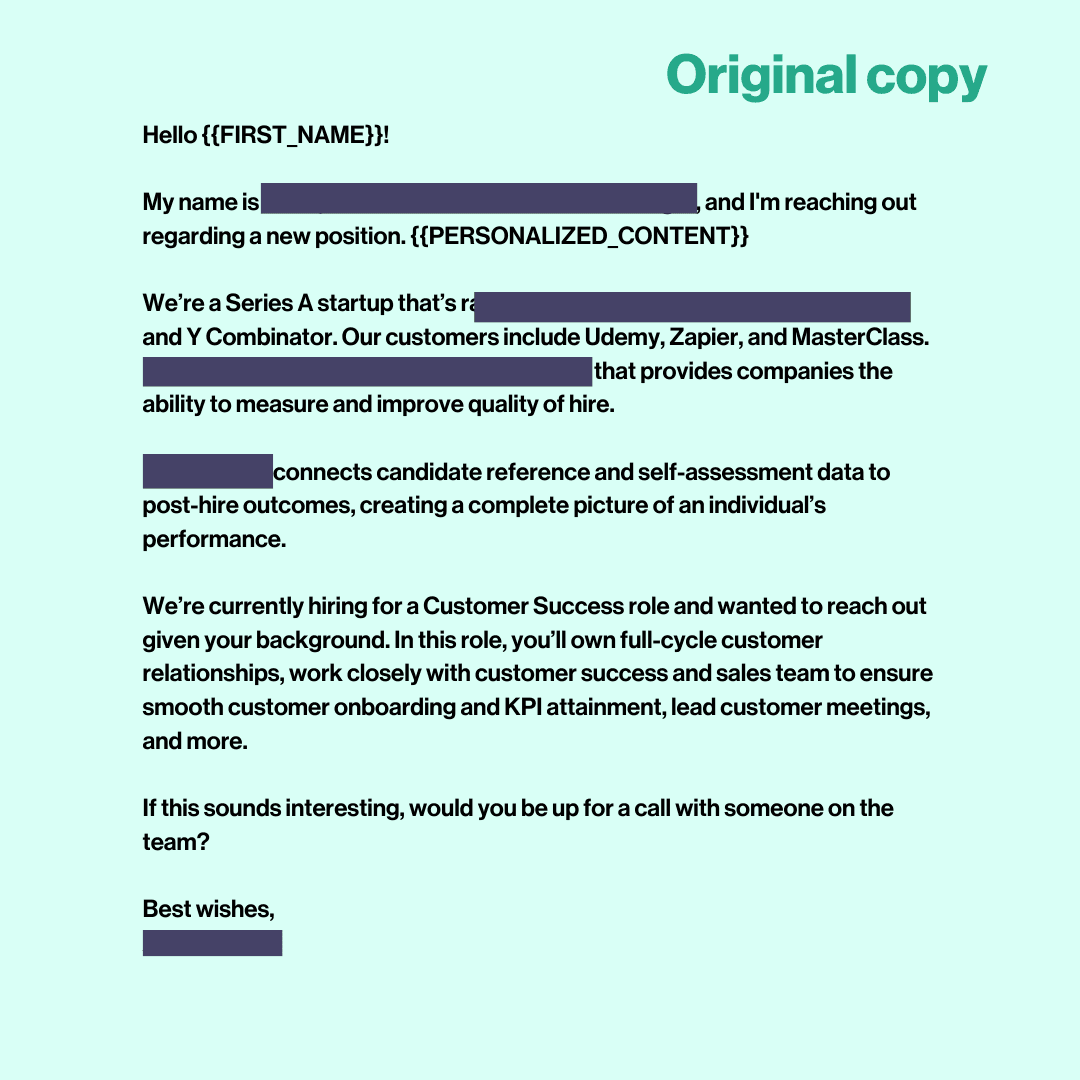

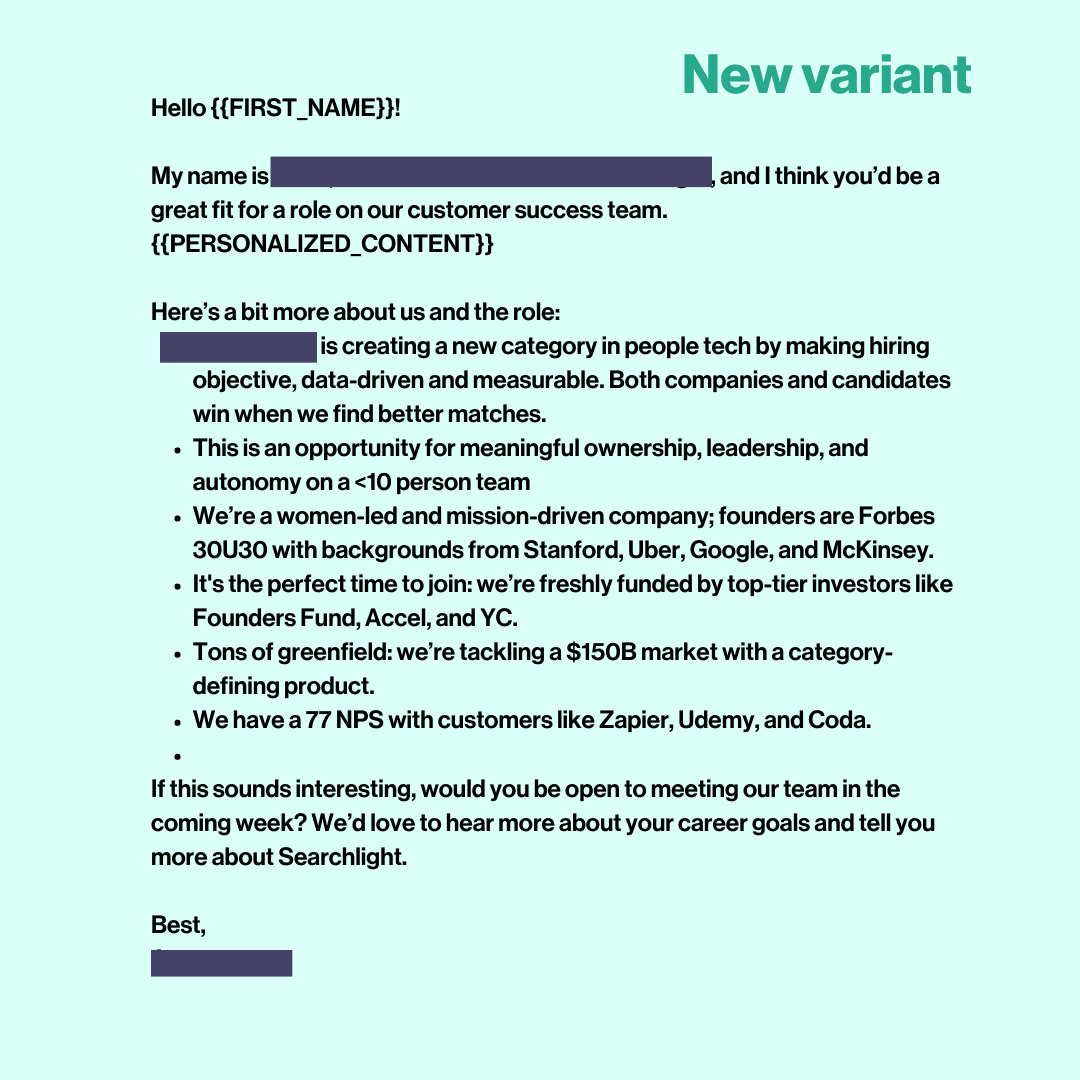

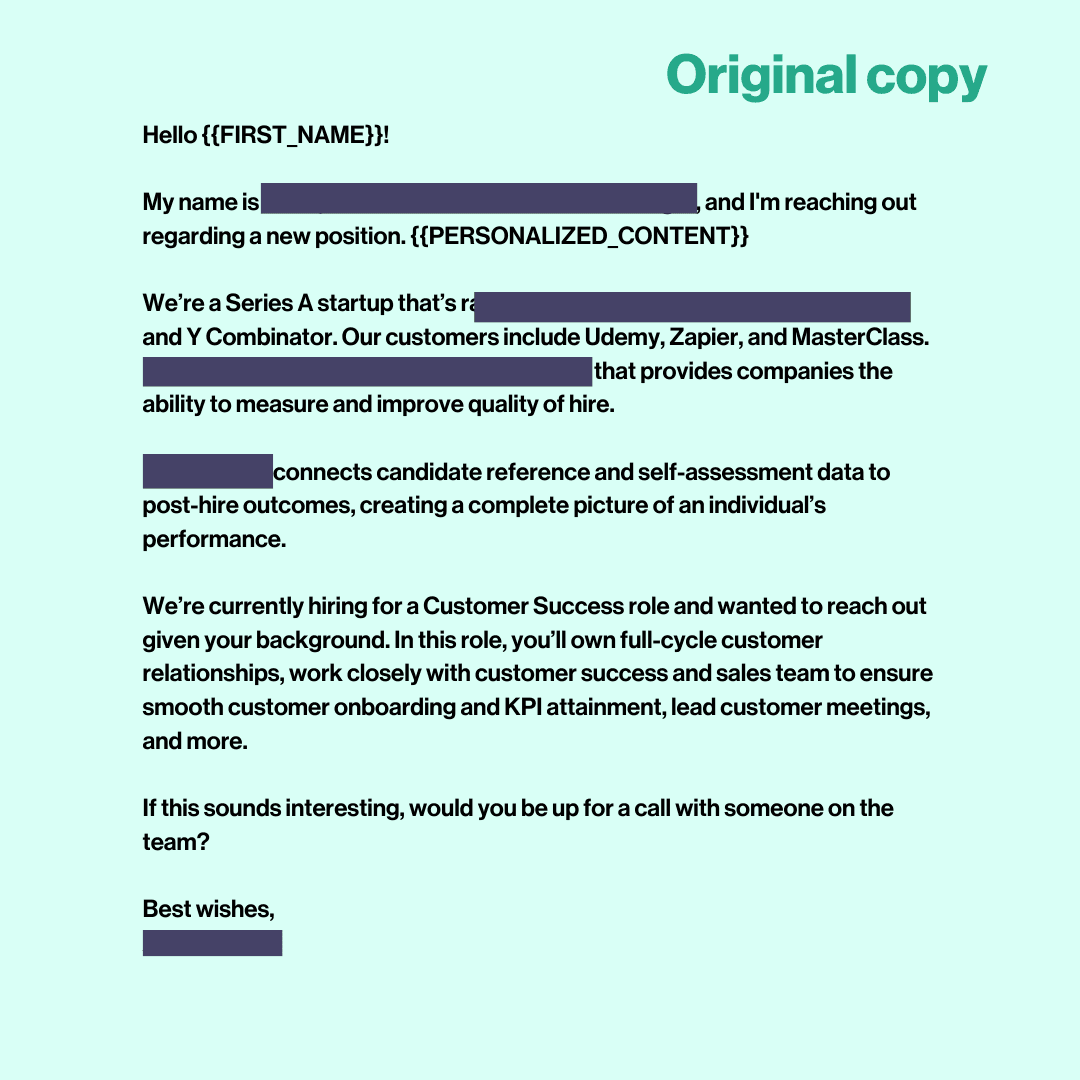

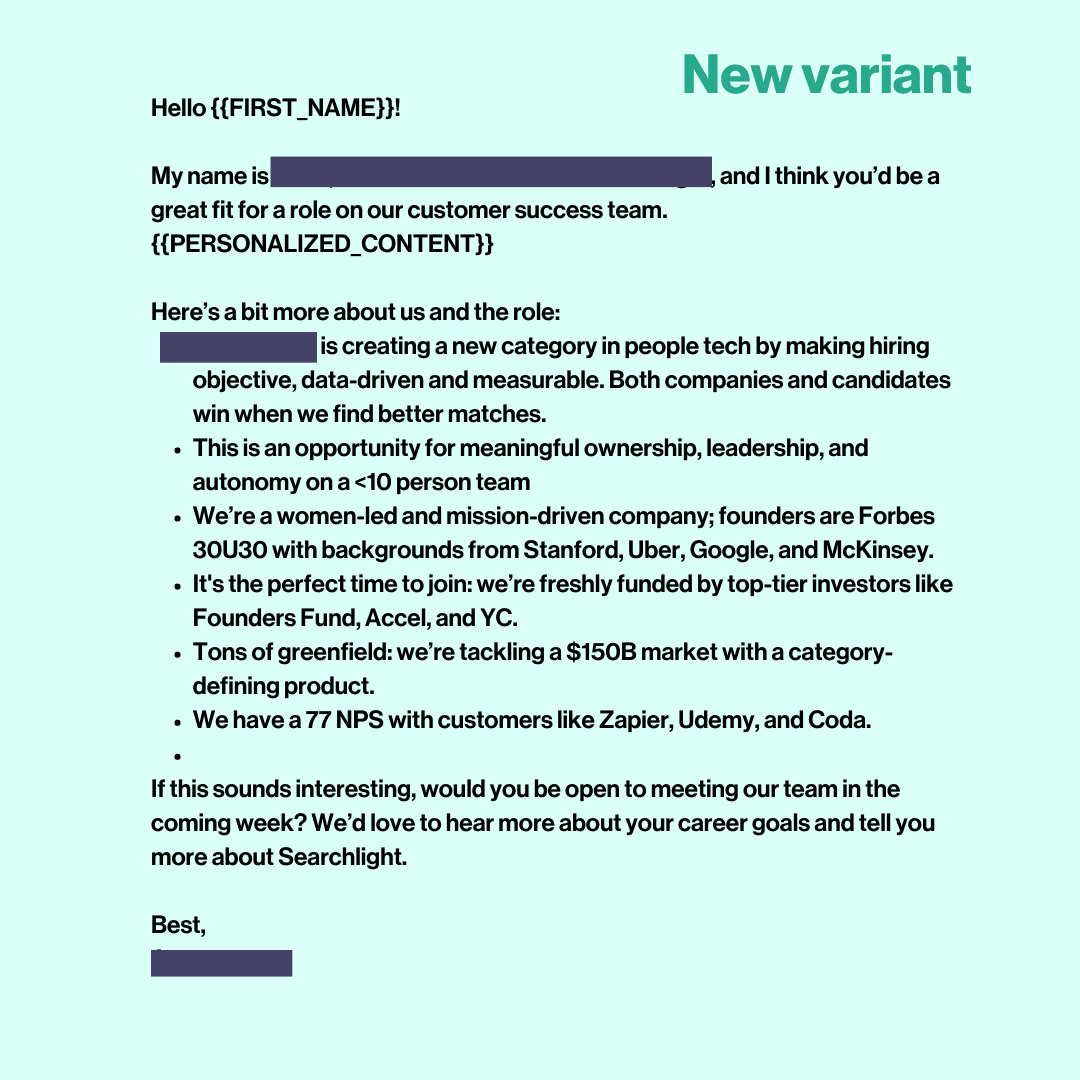

What: Rewrite outreach to be more concise, in a bullet point format for better readability. Also, pass the “scroll” test on mobile - or, you only need to scroll once to read the entire email.

Examples:

Results: On average, this variant 1.35x’d the interest rate. To put that into context, this yields an additional 1-2 candidates/wk, or a total of +14-16 candidates over the lifetime of the job if run through Dover. One more time: that’s 14 to 16 more people who raise their hand and say they’re interested in a role and company!
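To see how a 1.35x lift can translate into numbers of this size, here is a small worked calculation. The baseline of 4 interested candidates per week and the 10-week role lifetime are hypothetical inputs chosen for illustration; neither figure comes from the study.

```python
def extra_interested(baseline_per_week: float, lift: float, weeks: int) -> float:
    """Additional interested candidates over `weeks` for a multiplicative lift."""
    return baseline_per_week * (lift - 1.0) * weeks


# Assumed inputs (not from the study): 4 interested candidates/week, 10 weeks.
per_week = extra_interested(4, 1.35, 1)
lifetime = extra_interested(4, 1.35, 10)
print(round(per_week, 1), round(lifetime, 1))  # prints: 1.4 14.0
```

Under those assumptions the lift works out to a bit more than one extra interested candidate per week, which lands in the same range as the figures quoted above.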

The takeaway

It's reasonable to assume that mobile readability/brevity/concise language is key for highly in-demand roles (like those in product and engineering). The bullet point variant is one we quickly rolled out across all low-interest roles in the platform. It has become a Queen's Gambit-level chess move for raising open/response and interest rates across the board.

The losers

Experiment #3: Listing non-cash benefits in outreach

What: With a crowded market and candidates inundated with options, I thought that maybe candidates would be compelled by non-cash benefits more than the specifics of a job or a company.

Results: No lift, and in some cases, a negative effect. Don’t worry, I quickly shut those down. :)

Experiment #4: Linking to LinkedIn profiles, websites, and job descriptions

What: Throughout this process, I discovered small one-off variants across the org that outperformed on a massive scale (interest rates were >8% in some cases), and decided to test these patterns in these experiments. One of those findings was that linking to LinkedIn profiles of the email sender (as well as the website, and job description) increased interest rates slightly, perhaps, we guessed, because candidates thought they were talking to someone more “legit” or “credible” and not an automation tool.

Results: Neutral - no change across the board. Sometimes a fluke is a fluke.

The big big takeaway + aka the magic 🪄

Experiment #5: Combining winning elements

What: I took the winning bullet point template and the top subject line (for a refresher, that was “[First Name], looking for something new?”) and combined them to see if putting these two winners together could produce an even greater lift. Long story short: it did, by a whole lot.

Results: Combining the bullet point structure plus a winning subject line increased interest rates by more than 50%.

Here’s the recipe, so you can test it out across your open roles.

💡 Template

Hi {{FIRST_NAME}},

I’m {{HM}}, the {{role}} at {{company}}. {{Personalized_Content}} I thought you’d be a great addition to our team as a [role].

Here's a bit more about us and this opportunity:

- Mission statement

- Funding: $$ in Series X funding led by top investors: (list here)

- Being an early engineering hire means: adjective, adjective, adjective

- You'll work with {{departments}}

- Tech stack (for eng), design program (for product)

If you're interested, would you have 30 minutes in the next week to chat?

Best,

John Doe
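If you send outreach from your own tooling, a template like this can be filled in with a few lines of code. The sketch below is one possible approach, not how Dover's product works: it substitutes `{{PLACEHOLDER}}` tokens with values and refuses to send anything with an unfilled token. The `render` helper, the shortened template text, and the sample values are hypothetical.

```python
import re

# Matches tokens such as {{FIRST_NAME}} or {{company}}.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")

SUBJECT = "{{FIRST_NAME}}, looking for something new?"
OPENING = "Hi {{FIRST_NAME}},\n\nI’m {{HM}}, the {{role}} at {{company}}."


def render(template: str, values: dict) -> str:
    """Fill every {{token}} in the template, failing loudly if one is missing."""
    needed = {match.group(1) for match in PLACEHOLDER.finditer(template)}
    missing = sorted(needed - set(values))
    if missing:
        raise KeyError("missing values for: " + ", ".join(missing))
    return PLACEHOLDER.sub(lambda match: str(values[match.group(1)]), template)


# Hypothetical values for illustration.
values = {
    "FIRST_NAME": "Sam",
    "HM": "Alex",
    "role": "Head of Engineering",
    "company": "Acme",
}
print(render(SUBJECT, values))  # prints: Sam, looking for something new?
print(render(OPENING, values))
```

Raising an error on a missing value is a deliberate choice here: an email that reaches a candidate with a literal `{{FIRST_NAME}}` in it undoes the credibility the rest of the template is trying to build.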

What happened after

Overall, I learned that refreshing our outreach strategy, running experiments, and testing new and creative types of content is key to staying competitive in a crowded hiring market.

With the experimental framework already in place, our product team built an A/B test feature into our app that allows hiring teams to create a new copy variant when interest rates drop below benchmark in their hiring process. With even more outreach email data at our fingertips, we can iterate quickly and spread best practices or positive indicators across the org rapidly. Download our Great Recruiting Emails e-book for more inspiration.

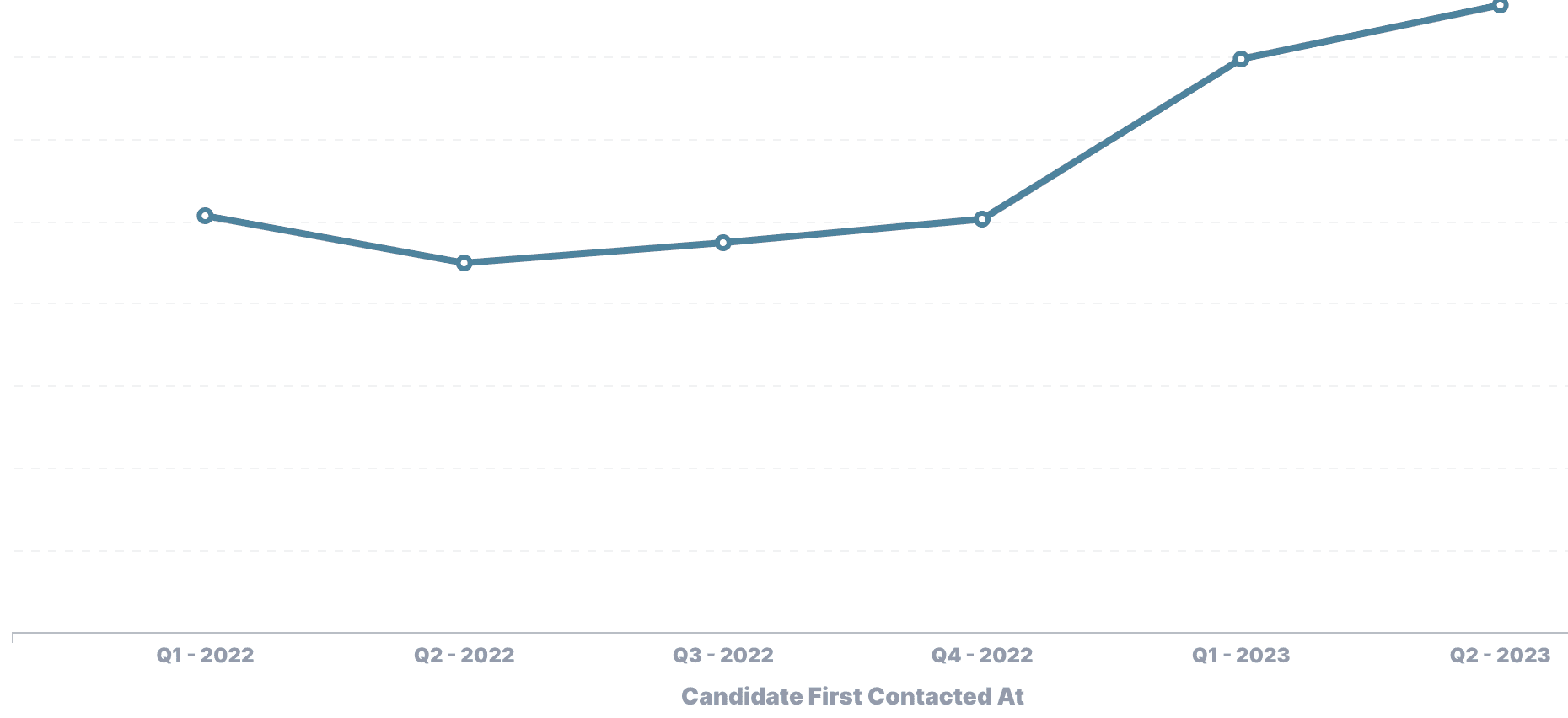

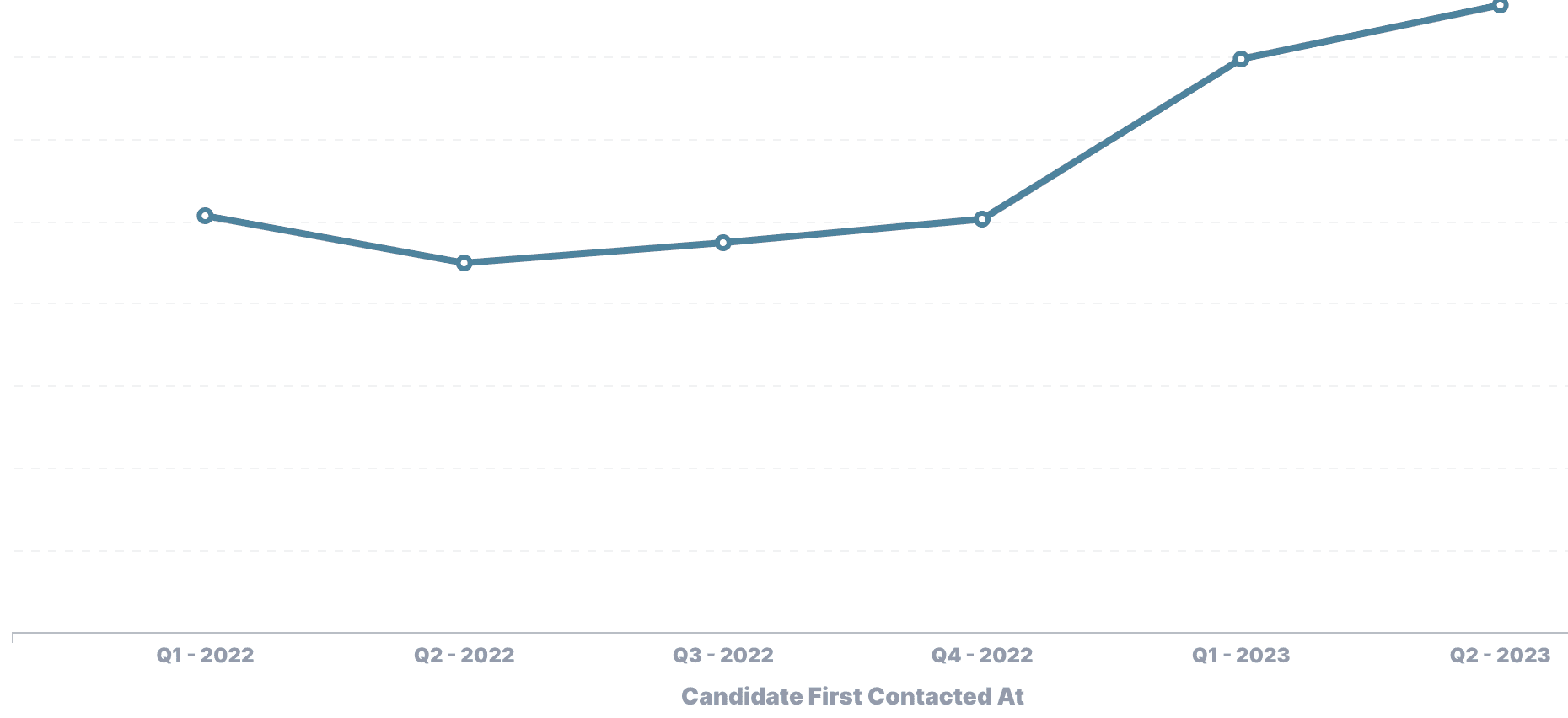

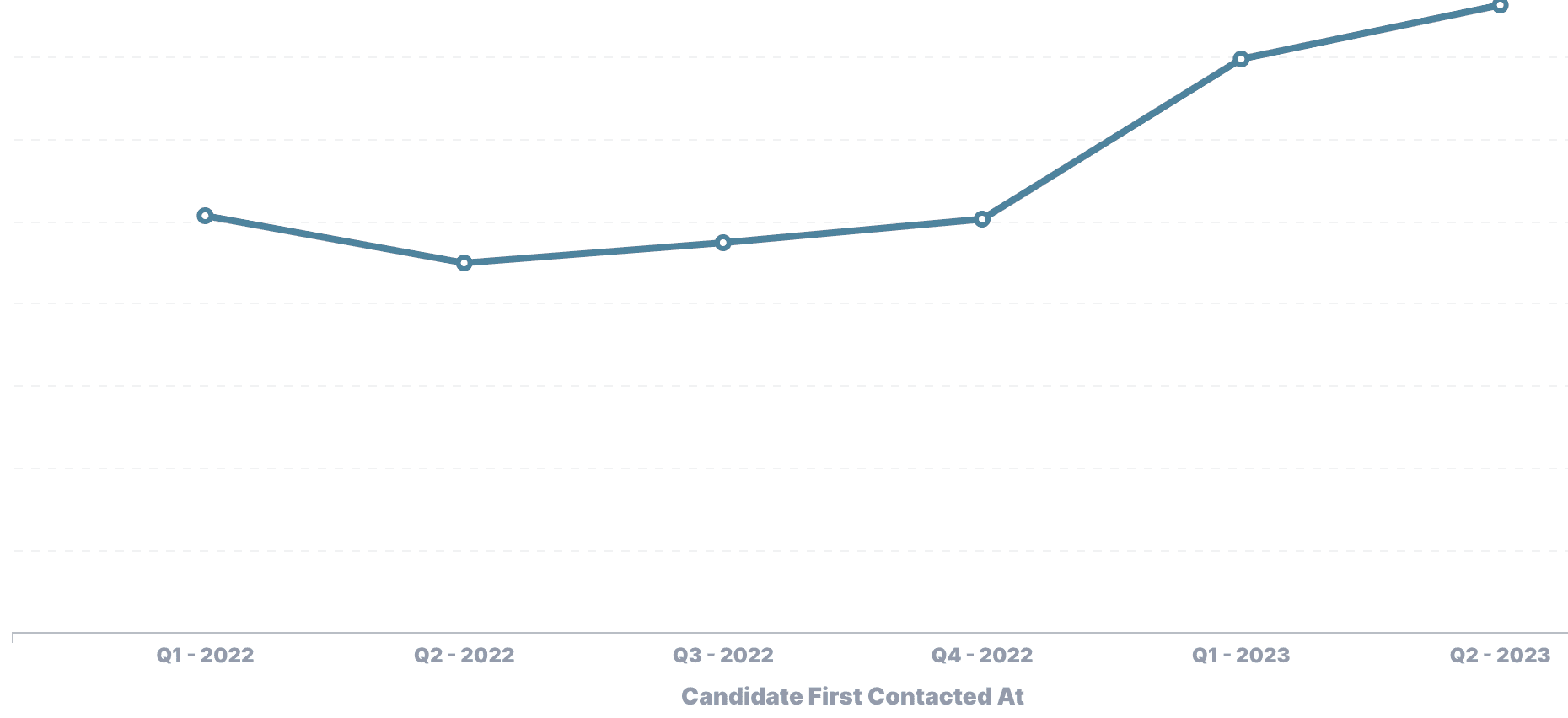

Since the dip in Q1 of 2022, we’ve seen an increase in interest rates across all roles!

Want someone else to write the emails for you?

Dover does a lot more than that — we give you actionable data, supercharge your team’s workflows, and enable you to engage talent at scale so you can deliver an amazing candidate experience.

Sign up here or email us at hello@dover.com.

Kickstart recruiting with Dover's Recruiting Partners
